Can Interquartile Range Be Negative

Probability is the relative frequency over an infinite number of trials.

For example, the probability of a coin landing on heads is .5, meaning that if you flip the coin an infinite number of times, it will land on heads half the time.

Since doing something an infinite number of times is impossible, relative frequency is often used as an estimate of probability. If you flip a coin 1000 times and get 507 heads, the relative frequency, .507, is a good estimate of the probability.
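
As a rough illustration of relative frequency as an estimate, the sketch below simulates fair coin flips with Python's standard library. The function name `relative_frequency` and the fixed seed are assumptions made for this example only.

```python
import random

def relative_frequency(n_flips, seed=0):
    """Simulate n_flips fair coin flips and return the share of heads."""
    rng = random.Random(seed)  # fixed seed so repeated runs match (an assumption)
    heads = sum(rng.random() < 0.5 for _ in range(n_flips))
    return heads / n_flips

# The example from the text: 507 heads in 1000 flips.
print(507 / 1000)

# With more flips, the simulated relative frequency tends to settle near .5.
for n in (100, 10_000, 1_000_000):
    print(n, relative_frequency(n))
```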

Chi-square goodness of fit tests are often used in genetics. One common application is to check if two genes are linked (i.e., whether they fail to assort independently). When genes are linked, the allele inherited for one gene affects the allele inherited for another gene.

Suppose that you want to know if the genes for pea texture (R = round, r = wrinkled) and color (Y = yellow, y = green) are linked. You perform a dihybrid cross between two heterozygous (RY / ry) pea plants. The hypotheses you're testing with your experiment are:

- Null hypothesis (H0): The population of offspring have an equal probability of inheriting all possible genotypic combinations.

- This would suggest that the genes are unlinked.

- Alternative hypothesis (Ha): The population of offspring do not have an equal probability of inheriting all possible genotypic combinations.

- This would suggest that the genes are linked.

You find 100 peas:

- 78 round and yellow peas

- 6 round and green peas

- 4 wrinkled and yellow peas

- 12 wrinkled and green peas

Step 1: Calculate the expected frequencies

To calculate the expected values, you can make a Punnett square. If the two genes are unlinked, the probability of each genotypic combination is equal.

| | RY | ry | Ry | rY |
|---|---|---|---|---|
| RY | RRYY | RrYy | RRYy | RrYY |
| ry | RrYy | rryy | Rryy | rrYy |
| Ry | RRYy | Rryy | RRyy | RrYy |
| rY | RrYY | rrYy | RrYy | rrYY |

The expected phenotypic ratios are therefore 9 round and yellow: 3 round and green: 3 wrinkled and yellow: 1 wrinkled and green.

From this, you can calculate the expected phenotypic frequencies for 100 peas:

| Phenotype | Observed | Expected |
|---|---|---|
| Round and yellow | 78 | 100 * (9/16) = 56.25 |
| Round and green | 6 | 100 * (3/16) = 18.75 |
| Wrinkled and yellow | 4 | 100 * (3/16) = 18.75 |
| Wrinkled and green | 12 | 100 * (1/16) = 6.25 |

Step 2: Calculate chi-square

| Phenotype | Observed | Expected | O − E | (O − E)² | (O − E)² / E |
|---|---|---|---|---|---|
| Round and yellow | 78 | 56.25 | 21.75 | 473.06 | 8.41 |
| Round and green | 6 | 18.75 | −12.75 | 162.56 | 8.67 |
| Wrinkled and yellow | 4 | 18.75 | −14.75 | 217.56 | 11.60 |
| Wrinkled and green | 12 | 6.25 | 5.75 | 33.06 | 5.29 |

Χ² = 8.41 + 8.67 + 11.60 + 5.29 = 33.97

Step 3: Find the critical chi-square value

Since there are four groups (round and yellow, round and green, wrinkled and yellow, wrinkled and green), there are three degrees of freedom.

For a test of significance at α = .05 and df = 3, the Χ² critical value is 7.82.

Step 4: Compare the chi-square value to the critical value

Χ² = 33.97

Critical value = 7.82

The Χ² value is greater than the critical value.

Step 5: Decide whether to reject the null hypothesis

The Χ² value is greater than the critical value, so we reject the null hypothesis that the population of offspring have an equal probability of inheriting all possible genotypic combinations. There is a significant difference between the observed and expected genotypic frequencies (p < .05).

The data supports the alternative hypothesis that the offspring do not have an equal probability of inheriting all possible genotypic combinations, which suggests that the genes are linked.
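
The five steps of this worked example can be sketched in a few lines of Python. This is only an illustration: the helper names `expected_counts` and `chi_square` are made up for the example, and the critical value 7.82 is copied from the text rather than computed. With the exact expected count 100 × 1/16 = 6.25 for wrinkled and green peas, the statistic comes out at about 33.97.

```python
# Observed counts in the order: round/yellow, round/green,
# wrinkled/yellow, wrinkled/green.
observed = [78, 6, 4, 12]
ratio = [9, 3, 3, 1]  # expected phenotypic ratio if the genes are unlinked

def expected_counts(total, ratio):
    """Split `total` observations according to the expected ratio."""
    parts = sum(ratio)
    return [total * r / parts for r in ratio]

def chi_square(observed, expected):
    """Sum of (O - E)^2 / E over all groups."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

expected = expected_counts(sum(observed), ratio)
statistic = chi_square(observed, expected)
degrees_of_freedom = len(observed) - 1

CRITICAL_VALUE = 7.82  # taken from the text for alpha = .05, df = 3
reject_null = statistic > CRITICAL_VALUE

print(expected, round(statistic, 2), degrees_of_freedom, reject_null)
```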

You can use the quantile() function to find quartiles in R. If your data is called "data", then "quantile(data, probs=c(.25,.5,.75), type=1)" will return the three quartiles.

You can use the QUARTILE() function to find quartiles in Excel. If your data is in column A, then click any blank cell and type "=QUARTILE(A:A,1)" for the first quartile, "=QUARTILE(A:A,2)" for the second quartile, and "=QUARTILE(A:A,3)" for the third quartile.

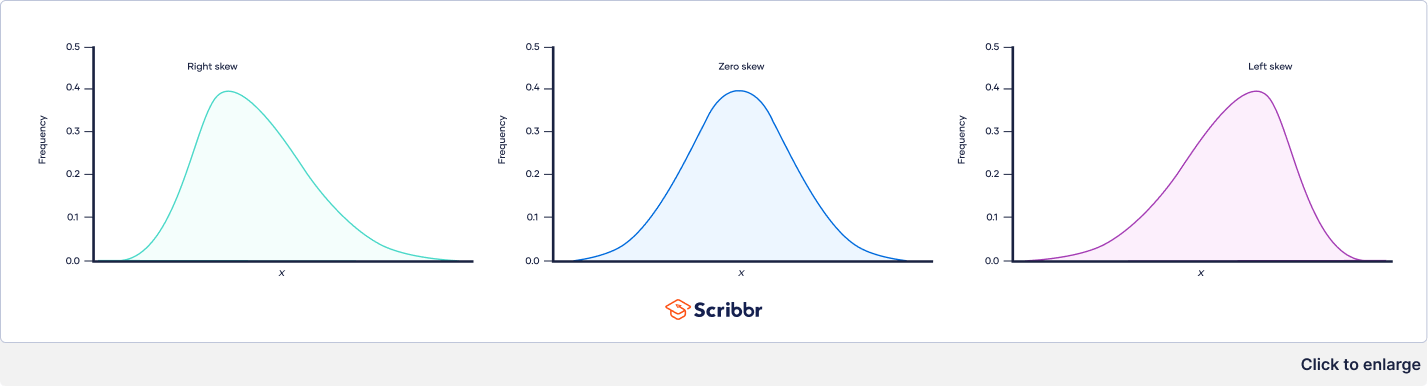

The three types of skewness are:

- Right skew (also called positive skew). A right-skewed distribution is longer on the right side of its peak than on its left.

- Left skew (also called negative skew). A left-skewed distribution is longer on the left side of its peak than on its right.

- Zero skew. A distribution with zero skew is symmetrical: its left and right sides are mirror images.

You can use the T.INV() function to find the critical value of t for one-tailed tests in Excel, and you can use the T.INV.2T() function for two-tailed tests.

=T.INV.2T(0.05,29)

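You can use the qt() function to find the critical value of t in R. The function gives the critical value of t for the one-tailed test. If you want the critical value of t for a two-tailed test, divide the significance level by two. Because qt() returns a lower-tail quantile by default, a small probability such as .025 gives a negative number; the critical value is its absolute value.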

qt(p = .025, df = 29)

There are three main types of missing data.

Missing completely at random (MCAR) data are randomly distributed across the variable and unrelated to other variables.

Missing at random (MAR) data are not randomly distributed but they are accounted for by other observed variables.

Missing not at random (MNAR) data systematically differ from the observed values.

To tidy up your missing data, your options usually include accepting, removing, or recreating the missing data.

- Acceptance: You leave your data as is

- Listwise or pairwise deletion: You delete all cases (participants) with missing data from analyses

- Imputation: You use other data to fill in the missing data

There are two steps to calculating the geometric mean:

- Multiply all values together to get their product.

- Find the nth root of the product (n is the number of values).

Before calculating the geometric mean, note that:

- The geometric mean can only be found for positive values.

- If any value in the data set is zero, the geometric mean is zero.
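
The two steps and the two caveats above can be sketched as follows. The function name `geometric_mean` and the choice to raise an error for negative values are assumptions of this example; Python's standard library also ships its own `statistics.geometric_mean`.

```python
import math

def geometric_mean(values):
    """Multiply all values, then take the nth root of the product."""
    if not values:
        raise ValueError("need at least one value")
    if any(v < 0 for v in values):
        # Assumption for this sketch: negative values are rejected outright.
        raise ValueError("geometric mean is only defined here for non-negative values")
    product = math.prod(values)          # step 1: product of all values
    return product ** (1 / len(values))  # step 2: nth root of the product

print(geometric_mean([2, 8]))     # square root of 16
print(geometric_mean([3, 0, 5]))  # any zero makes the result zero
```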

The arithmetic mean is the most commonly used type of mean and is often referred to simply as "the mean." While the arithmetic mean is based on adding and dividing values, the geometric mean multiplies and finds the root of values.

Even though the geometric mean is a less common measure of central tendency, it's more accurate than the arithmetic mean for percentage change and positively skewed data. The geometric mean is often reported for financial indices and population growth rates.

Outliers are extreme values that differ from most values in the dataset. You find outliers at the extreme ends of your dataset.

You can choose from four main ways to detect outliers:

- Sorting your values from low to high and checking minimum and maximum values

- Visualizing your data with a box plot and looking for outliers

- Using the interquartile range to create fences for your data

- Using statistical procedures to identify extreme values
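
The interquartile range approach can be sketched as below. The multiplier of 1.5 is a widely used convention that the text does not state, so treat it as an assumption; the helper names are also invented for this example, and the quartiles come from `statistics.quantiles` with its default method, which may differ slightly from other quartile methods.

```python
from statistics import quantiles

def iqr_fences(values, k=1.5):
    """Return (lower fence, upper fence) built from the interquartile range."""
    q1, _, q3 = quantiles(values, n=4)  # three cut points: Q1, median, Q3
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr

def find_outliers(values, k=1.5):
    """Values that fall outside the fences."""
    low, high = iqr_fences(values, k)
    return [v for v in values if v < low or v > high]

data = [1, 2, 3, 4, 5, 6, 7, 8, 9, 100]
print(iqr_fences(data))
print(find_outliers(data))
```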

Correlation coefficients always range between −1 and 1.

The sign of the coefficient tells you the direction of the relationship: a positive value means the variables change together in the same direction, while a negative value means they change together in opposite directions.

The absolute value of a number is equal to the number without its sign. The absolute value of a correlation coefficient tells you the magnitude of the correlation: the greater the absolute value, the stronger the correlation.
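
To make the sign and magnitude concrete, here is a small sketch that computes Pearson's r by hand. Pearson's r is only one kind of correlation coefficient, and the function name `pearson_r` is chosen for this example.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation: covariance scaled by both standard deviations."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

xs = [1, 2, 3, 4]
print(pearson_r(xs, [2, 4, 6, 8]))  # variables rise together: positive sign
print(pearson_r(xs, [8, 6, 4, 2]))  # one rises as the other falls: negative sign
print(pearson_r(xs, [2, 1, 4, 3]))  # weaker relationship: smaller absolute value
```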

There are various ways to improve power:

- Increase the potential effect size by manipulating your independent variable more strongly,

- Increase sample size,

- Increase the significance level (alpha),

- Reduce measurement error by increasing the precision and accuracy of your measurement devices and procedures,

- Use a one-tailed test instead of a two-tailed test for t tests and z tests.

A power analysis is a calculation that helps you determine a minimum sample size for your study. It's made up of four main components. If you know or have estimates for any three of these, you can calculate the fourth component.

- Statistical power: the likelihood that a test will detect an effect of a certain size if there is one, usually set at 80% or higher.

- Sample size: the minimum number of observations needed to detect an effect of a certain size with a given power level.

- Significance level (alpha): the maximum risk of rejecting a true null hypothesis that you are willing to accept, usually set at 5%.

- Expected effect size: a standardized way of expressing the magnitude of the expected result of your study, usually based on similar studies or a pilot study.
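
As one way to see how three components determine the fourth, the sketch below solves for sample size. It assumes a two-sided comparison of two group means with the effect size expressed as Cohen's d, and it uses a normal approximation, so dedicated power software (which typically uses the t-distribution) may give slightly larger numbers. The function name is hypothetical.

```python
import math
from statistics import NormalDist

def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate n per group for a two-sided, two-group comparison of means.

    Normal approximation: n = 2 * ((z_(1 - alpha/2) + z_power) / d) ** 2
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # cutoff for the significance level
    z_power = z.inv_cdf(power)          # quantile matching the desired power
    n = 2 * ((z_alpha + z_power) / effect_size) ** 2
    return math.ceil(n)                 # round up to whole participants

print(sample_size_per_group(0.5))              # medium effect
print(sample_size_per_group(0.8))              # larger effect needs fewer people
print(sample_size_per_group(0.5, power=0.90))  # more power needs more people
```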

The risk of making a Type I error is the significance level (or alpha) that you choose. That's a value that you set at the beginning of your study to assess the statistical probability of obtaining your results (p value).

The significance level is usually set at 0.05 or 5%. This means that your results only have a 5% chance of occurring, or less, if the null hypothesis is actually true.

To reduce the Type I error probability, you can set a lower significance level.

In statistics, power refers to the likelihood of a hypothesis test detecting a true effect if there is one. A statistically powerful test is more likely to avoid a false negative (a Type II error).

If you don't ensure enough power in your study, you may not be able to detect a statistically significant result even when it has practical significance. Your study might not have the ability to answer your research question.

There are dozens of measures of effect sizes. The most common effect sizes are Cohen's d and Pearson's r. Cohen's d measures the size of the difference between two groups while Pearson's r measures the strength of the relationship between two variables.

Effect size tells you how meaningful the relationship between variables or the difference between groups is.

A large effect size means that a research finding has practical significance, while a small effect size indicates limited practical applications.

The standard error of the mean, or simply standard error, indicates how different the population mean is likely to be from a sample mean. It tells you how much the sample mean would vary if you were to repeat a study using new samples from within a single population.
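
A common estimate of the standard error is the sample standard deviation divided by the square root of the sample size. The text does not give this formula, so the sketch below is an illustration under that assumption, with a made-up sample.

```python
import math
from statistics import stdev

def standard_error(sample):
    """Estimated standard error of the mean: s / sqrt(n)."""
    return stdev(sample) / math.sqrt(len(sample))

sample = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical measurements
print(standard_error(sample))
```

Because n sits under a square root in the denominator, quadrupling the sample size roughly halves the standard error.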

To figure out whether a given number is a parameter or a statistic, ask yourself the following:

- Does the number describe a whole, complete population where every member can be reached for data collection?

- Is it possible to collect data for this number from every member of the population in a reasonable time frame?

If the answer is yes to both questions, the number is likely to be a parameter. For small populations, data can be collected from the whole population and summarized in parameters.

If the answer is no to either of the questions, then the number is more likely to be a statistic.

The arithmetic mean is the most commonly used mean. It's often simply called the mean or the average. But there are some other types of means you can calculate depending on your research purposes:

- Weighted mean: some values contribute more to the mean than others.

- Geometric mean: values are multiplied rather than summed up.

- Harmonic mean: reciprocals of values are used instead of the values themselves.
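
The weighted and harmonic means from this list can be sketched as below. The function names and sample numbers are illustrative only.

```python
def weighted_mean(values, weights):
    """Each value counts in proportion to its weight."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)

def harmonic_mean(values):
    """Number of values divided by the sum of their reciprocals."""
    return len(values) / sum(1 / v for v in values)

# The third value has twice the weight of the others.
print(weighted_mean([1, 2, 3], [1, 1, 2]))
# Typical use: averaging rates, such as speeds over equal distances.
print(harmonic_mean([1, 2, 4]))
```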

You can find the mean, or average, of a data set in two simple steps:

- Find the sum of the values by adding them all up.

- Divide the sum by the number of values in the data set.

This method is the same whether you are dealing with sample or population data or positive or negative numbers.

The median is the most informative measure of central tendency for skewed distributions or distributions with outliers. For example, the median is often used as a measure of central tendency for income distributions, which are generally highly skewed.

Because the median only uses one or two values, it's unaffected by extreme outliers or non-symmetric distributions of scores. In contrast, the mean and mode can vary in skewed distributions.

A data set can often have no mode, one mode or more than one mode – it all depends on how many different values repeat most frequently.

Your data can be:

- without any mode,

- unimodal, with one mode,

- bimodal, with two modes,

- trimodal, with three modes, or

- multimodal, with four or more modes.

To find the mode:

- If your data is numerical or quantitative, order the values from low to high.

- If it is categorical, sort the values by group, in any order.

Then you simply need to identify the most frequently occurring value.
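
Counting occurrences does the same job as sorting and scanning by eye. In the sketch below, the function name `modes` is invented, and treating a data set in which no value repeats as having no mode is an assumption; conventions differ on that point.

```python
from collections import Counter

def modes(values):
    """Return every value tied for the highest count, or [] if nothing repeats."""
    counts = Counter(values)
    if not counts:
        return []
    highest = max(counts.values())
    if highest == 1:  # assumption: no repeated value means no mode
        return []
    return sorted(v for v, c in counts.items() if c == highest)

print(modes([1, 2, 2, 3, 3]))                 # bimodal
print(modes([1, 2, 3]))                       # no mode
print(modes(["bus", "car", "bus", "train"]))  # works for categorical data too
```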

The two most common methods for calculating interquartile range are the exclusive and inclusive methods.

The exclusive method excludes the median when identifying Q1 and Q3, while the inclusive method includes the median as a value in the data set in identifying the quartiles.

For each of these methods, you'll need different procedures for finding the median, Q1 and Q3 depending on whether your sample size is even- or odd-numbered. The exclusive method works best for even-numbered sample sizes, while the inclusive method is frequently used with odd-numbered sample sizes.
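
One reading of the two methods is sketched below: split the sorted data at the median, then take the median of each half, with the `inclusive` flag deciding whether the overall median belongs to both halves when the sample size is odd. The function names are assumptions, and statistical packages may use other quartile definitions. Whichever method is used, Q3 is never smaller than Q1, so the interquartile range cannot be negative.

```python
from statistics import median

def quartiles(values, inclusive=False):
    """Return (Q1, median, Q3) using the median-of-halves approach."""
    data = sorted(values)
    half = len(data) // 2
    if len(data) % 2 == 0:
        lower, upper = data[:half], data[half:]      # even n: clean split
    elif inclusive:
        lower, upper = data[:half + 1], data[half:]  # median kept in both halves
    else:
        lower, upper = data[:half], data[half + 1:]  # median left out
    return median(lower), median(data), median(upper)

def interquartile_range(values, inclusive=False):
    q1, _, q3 = quartiles(values, inclusive)
    return q3 - q1

data = [1, 3, 5, 7, 9, 11, 13]
print(quartiles(data), interquartile_range(data))
print(quartiles(data, inclusive=True), interquartile_range(data, inclusive=True))
```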

Homoscedasticity, or homogeneity of variances, is an assumption of equal or similar variances in different groups being compared.

This is an important assumption of parametric statistical tests because they are sensitive to any dissimilarities. Uneven variances in samples result in biased and skewed test results.

The empirical rule, or the 68-95-99.7 rule, tells you where most of the values lie in a normal distribution:

- Around 68% of values are within 1 standard deviation of the mean.

- Around 95% of values are within 2 standard deviations of the mean.

- Around 99.7% of values are within 3 standard deviations of the mean.

The empirical rule is a quick way to get an overview of your data and check for any outliers or extreme values that don't follow this pattern.
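
The three percentages can be checked against the standard normal distribution with Python's standard library. The function name `share_within` is made up for this sketch.

```python
from statistics import NormalDist

def share_within(k):
    """Share of a normal distribution within k standard deviations of the mean."""
    z = NormalDist()  # standard normal: mean 0, standard deviation 1
    return z.cdf(k) - z.cdf(-k)

for k in (1, 2, 3):
    print(k, round(share_within(k) * 100, 1))
```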

Variability tells you how far apart points lie from each other and from the center of a distribution or a data set.

Variability is also referred to as spread, scatter or dispersion.

While interval and ratio data can both be categorized, ranked, and have equal spacing between adjacent values, only ratio scales have a true zero.

For example, temperature in Celsius or Fahrenheit is at an interval scale because zero is not the lowest possible temperature. In the Kelvin scale, a ratio scale, zero represents a total lack of thermal energy.

A critical value is the value of the test statistic which defines the upper and lower bounds of a confidence interval, or which defines the threshold of statistical significance in a statistical test. It describes how far from the mean of the distribution you have to go to cover a certain amount of the total variation in the data (e.g., 90%, 95%, 99%).

If you are constructing a 95% confidence interval and are using a threshold of statistical significance of p = 0.05, then your critical value will be identical in both cases.

A t-score (a.k.a. a t-value) is equivalent to the number of standard deviations away from the mean of the t-distribution.

The t-score is the test statistic used in t-tests and regression tests. It can also be used to describe how far from the mean an observation is when the data follow a t-distribution.

The t-distribution is a way of describing a set of observations where most observations fall close to the mean, and the rest of the observations make up the tails on either side. It resembles the normal distribution but has heavier tails, and it is used for smaller sample sizes, where the variance in the data is unknown.

The t-distribution forms a bell curve when plotted on a graph. It can be described mathematically using the mean and the standard deviation.

Ordinal data has two characteristics:

- The data can be classified into different categories within a variable.

- The categories have a natural ranked order.

However, unlike with interval data, the distances between the categories are uneven or unknown.

Nominal information is information that can be labelled or classified into mutually exclusive categories within a variable. These categories cannot be ordered in a meaningful way.

For example, for the nominal variable of preferred mode of transportation, you may have the categories of car, bus, train, tram or bike.

If your confidence interval for a difference between groups includes zero, that means that if you run your experiment again you have a good chance of finding no difference between groups.

If your confidence interval for a correlation or regression includes zero, that means that if you run your experiment again there is a good chance of finding no correlation in your data.

In both of these cases, you will also find a high p-value when you run your statistical test, meaning that your results could have occurred under the null hypothesis of no relationship between variables or no difference between groups.

The z-score and t-score (aka z-value and t-value) show how many standard deviations away from the mean of the distribution you are, assuming your data follow a z-distribution or a t-distribution.

These scores are used in statistical tests to show how far from the mean of the predicted distribution your statistical estimate is. If your test produces a z-score of 2.5, this means that your estimate is 2.5 standard deviations from the predicted mean.

The predicted mean and distribution of your estimate are generated by the null hypothesis of the statistical test you are using. The more standard deviations away from the predicted mean your estimate is, the less likely it is that the estimate could have occurred under the null hypothesis.
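
To connect a z-score to "how likely under the null hypothesis", the sketch below converts a z-score into a two-tailed p-value using the standard normal distribution. The two-tailed choice and the function name `two_tailed_p` are assumptions of this example.

```python
from statistics import NormalDist

def two_tailed_p(z):
    """Probability of a z-score at least this far from the mean, in either direction."""
    return 2 * (1 - NormalDist().cdf(abs(z)))

# The further the estimate is from the predicted mean, the smaller the p-value.
for z in (0.5, 1.96, 2.5, 3.0):
    print(z, round(two_tailed_p(z), 4))
```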

The confidence level is the percentage of times you expect to get close to the same estimate if you run your experiment again or resample the population in the same way.

The confidence interval consists of the upper and lower bounds of the estimate you expect to find at a given level of confidence.

For example, if you are estimating a 95% confidence interval around the mean proportion of female babies born every year based on a random sample of babies, you might find an upper bound of 0.56 and a lower bound of 0.48. These are the upper and lower bounds of the confidence interval. The confidence level is 95%.
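
Bounds like these can be reproduced with a simple normal-approximation interval for a proportion. The sample below (312 girls among 600 babies) is hypothetical and was chosen only so that the result lands near 0.48 and 0.56; the text does not say which sample or method produced its numbers.

```python
import math
from statistics import NormalDist

def proportion_ci(successes, n, confidence=0.95):
    """Normal-approximation confidence interval for a proportion."""
    p = successes / n
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    margin = z * math.sqrt(p * (1 - p) / n)
    return p - margin, p + margin

lower, upper = proportion_ci(312, 600)  # hypothetical sample
print(round(lower, 2), round(upper, 2))
```

A higher confidence level widens the interval, and a larger sample narrows it.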

Some variables have fixed levels. For example, gender and ethnicity are always nominal level data because they cannot be ranked.

However, for other variables, you can choose the level of measurement. For example, income is a variable that can be recorded on an ordinal or a ratio scale:

- At an ordinal level, you could create five income groupings and code the incomes that fall within them from 1–5.

- At a ratio level, you would record exact numbers for income.

If you have a choice, the ratio level is always preferable because you can analyze data in more ways. The higher the level of measurement, the more precise your data is.

The alpha value, or the threshold for statistical significance, is arbitrary – which value you use depends on your field of study.

In most cases, researchers use an alpha of 0.05, which means that there is a less than 5% chance that the data being tested could have occurred under the null hypothesis.

P-values are usually automatically calculated by the program you use to perform your statistical test. They can also be estimated using p-value tables for the relevant test statistic.

P-values are calculated from the null distribution of the test statistic. They tell you how often a test statistic is expected to occur under the null hypothesis of the statistical test, based on where it falls in the null distribution.

If the test statistic is far from the mean of the null distribution, then the p-value will be small, showing that the test statistic is not likely to have occurred under the null hypothesis.

The test statistic will change based on the number of observations in your data, how variable your observations are, and how strong the underlying patterns in the data are.

For example, if one data set has higher variability while another has lower variability, the first data set will produce a test statistic closer to the null hypothesis, even if the true correlation between two variables is the same in either data set.

In statistics, model selection is a process researchers use to compare the relative value of different statistical models and determine which one is the best fit for the observed data.

The Akaike information criterion is one of the most common methods of model selection. AIC weighs the ability of the model to predict the observed data against the number of parameters the model requires to reach that level of precision.

AIC model selection can help researchers find a model that explains the observed variation in their data while avoiding overfitting.

The Akaike information criterion is calculated from the maximum log-likelihood of the model and the number of parameters (K) used to reach that likelihood. The AIC function is 2K – 2(log-likelihood).

Lower AIC values indicate a better-fit model, and a model whose AIC is lower by more than 2 (that is, a delta-AIC, the difference between the two AIC values being compared, of more than 2) is considered significantly better than the model it is being compared to.
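
The AIC formula is short enough to compute directly. In the sketch below, the two models, their parameter counts and their log-likelihoods are invented numbers used only to show the comparison.

```python
def aic(k, log_likelihood):
    """Akaike information criterion: 2K - 2(log-likelihood)."""
    return 2 * k - 2 * log_likelihood

def delta_aic(aic_a, aic_b):
    """Absolute difference between two AIC values."""
    return abs(aic_a - aic_b)

# Hypothetical fits: the bigger model gains little likelihood for two extra parameters.
simple_model = aic(k=3, log_likelihood=-100.0)
complex_model = aic(k=5, log_likelihood=-99.5)

print(simple_model, complex_model, delta_aic(simple_model, complex_model))
```

Here the extra parameters cost more than the small gain in likelihood is worth, so the simpler model ends up with the lower AIC.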

A factorial ANOVA is any ANOVA that uses more than one categorical independent variable. A two-way ANOVA is a type of factorial ANOVA.

Some examples of factorial ANOVAs include:

- Testing the combined effects of vaccination (vaccinated or not vaccinated) and health status (healthy or pre-existing condition) on the rate of flu infection in a population.

- Testing the effects of marital status (married, single, divorced, widowed), job status (employed, self-employed, unemployed, retired), and family history (no family history, some family history) on the incidence of depression in a population.

- Testing the effects of feed type (type A, B, or C) and barn crowding (not crowded, somewhat crowded, very crowded) on the final weight of chickens in a commercial farming operation.

In ANOVA, the null hypothesis is that there is no difference among group means. If any group differs significantly from the overall group mean, then the ANOVA will report a statistically significant result.

Significant differences among group means are calculated using the F statistic, which is the ratio of the mean sum of squares (the variance explained by the independent variable) to the mean square error (the variance left over).

If the F statistic is higher than the critical value (the value of F that corresponds with your alpha value, usually 0.05), then the difference among groups is deemed statistically significant.
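
For a one-way ANOVA, the F statistic can be sketched as the between-group mean square divided by the within-group mean square. The function name and the three small groups are invented for illustration, and the critical value would still need to be looked up for your alpha and degrees of freedom.

```python
def f_statistic(groups):
    """One-way ANOVA F: mean square between groups / mean square error."""
    all_values = [v for g in groups for v in g]
    grand_mean = sum(all_values) / len(all_values)
    group_means = [sum(g) / len(g) for g in groups]

    # Variation explained by group membership.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    # Variation left over inside each group.
    ss_within = sum((v - m) ** 2
                    for g, m in zip(groups, group_means) for v in g)

    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)

groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]  # hypothetical measurements
print(f_statistic(groups))
```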

The only difference between one-way and two-way ANOVA is the number of independent variables. A one-way ANOVA has one independent variable, while a two-way ANOVA has two.

- One-way ANOVA: Testing the relationship between shoe brand (Nike, Adidas, Saucony, Hoka) and race finish times in a marathon.

- Two-way ANOVA: Testing the relationship between shoe brand (Nike, Adidas, Saucony, Hoka), runner age group (junior, senior, master's), and race finishing times in a marathon.

All ANOVAs are designed to test for differences among three or more groups. If you are only testing for a difference between two groups, use a t-test instead.

Linear regression most often uses mean-square error (MSE) to calculate the error of the model. MSE is calculated by:

- measuring the distance of the observed y-values from the predicted y-values at each value of x;

- squaring each of these distances;

- calculating the mean of the squared distances.

Linear regression fits a line to the data by finding the regression coefficient that results in the smallest MSE.
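
The three MSE steps, plus a least-squares fit for a single predictor, can be sketched as follows. The function names and data points are assumptions for this example; the fit uses the usual closed-form least-squares solution for one independent variable.

```python
def fit_line(xs, ys):
    """Least-squares intercept and slope for one independent variable."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return intercept, slope

def mean_squared_error(xs, ys, intercept, slope):
    """Mean of the squared distances between observed and predicted y-values."""
    residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]
    return sum(r ** 2 for r in residuals) / len(residuals)

xs = [1, 2, 3, 4]
ys = [2, 4, 5, 8]  # hypothetical observations
intercept, slope = fit_line(xs, ys)
print(intercept, slope, mean_squared_error(xs, ys, intercept, slope))
```

Any other line through the same data gives an MSE at least as large as the fitted one.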

Simple linear regression is a regression model that estimates the relationship between one independent variable and one dependent variable using a straight line. Both variables should be quantitative.

For example, the relationship between temperature and the expansion of mercury in a thermometer can be modeled using a straight line: as temperature increases, the mercury expands. This linear relationship is so certain that we can use mercury thermometers to measure temperature.

A regression model is a statistical model that estimates the relationship between one dependent variable and one or more independent variables using a line (or a plane in the case of two or more independent variables).

A regression model can be used when the dependent variable is quantitative, except in the case of logistic regression, where the dependent variable is binary.

A one-sample t-test is used to compare a single population to a standard value (for example, to determine whether the average lifespan of a specific town is different from the country average).

A paired t-test is used to compare a single population before and after some experimental intervention or at two different points in time (for example, measuring student performance on a test before and after being taught the material).

A t-test measures the difference in group means divided by the pooled standard error of the two group means.

In this way, it calculates a number (the t-value) illustrating the magnitude of the difference between the two group means being compared, and estimates the likelihood that this difference exists purely by chance (p-value).
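
For two independent groups, the t-value described above can be sketched like this. The sketch assumes equal variances in the two groups (the classic pooled form), and the function name and data are invented; it stops at the t-value and does not compute the p-value.

```python
import math
from statistics import mean, variance

def pooled_t(a, b):
    """Two-sample t-value: difference in means / pooled standard error."""
    n_a, n_b = len(a), len(b)
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = (((n_a - 1) * variance(a) + (n_b - 1) * variance(b))
                  / (n_a + n_b - 2))
    standard_error = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(a) - mean(b)) / standard_error

group_a = [1, 2, 3]  # hypothetical scores
group_b = [2, 3, 4]
print(pooled_t(group_a, group_b))
```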

Your choice of t-test depends on whether you are studying one group or two groups, and whether you care about the direction of the difference in group means.

If you are studying one group, use a paired t-test to compare the group mean over time or after an intervention, or use a one-sample t-test to compare the group mean to a standard value. If you are studying two groups, use a two-sample t-test.

If you want to know only whether a difference exists, use a two-tailed test. If you want to know if one group mean is greater or less than the other, use a left-tailed or right-tailed one-tailed test.

Statistical significance is a term used by researchers to state that it is unlikely their observations could have occurred under the null hypothesis of a statistical test. Significance is usually denoted by a p-value, or probability value.

Statistical significance is arbitrary – it depends on the threshold, or alpha value, chosen by the researcher. The most common threshold is p < 0.05, which means that the data is likely to occur less than 5% of the time under the null hypothesis.

When the p-value falls below the chosen alpha value, then we say the result of the test is statistically significant.


Source: https://www.scribbr.com/frequently-asked-questions/can-the-range-be-a-negative-number/

Posted by: smithupyrairow.blogspot.com
